The microphone has changed and evolved; the ear has not. We have updated this blog to reflect the advances in microphones for the same old ears. Blog updated on 11/15/19.

Two Ears Needed

Binaural hearing is the name for the hearing process that uses two ears. It is a complex system that must be able to recognize small variations in the intensity of the sound along with other spectral information. This ability to assess intensity, spectral information, and timing cues quickly is what lets humans localize sound. Let’s compare the ear and the microphone.

Sound Localization

The mechanism we use for sound localization has three main components, which can be visualized using a three-dimensional positioning paradigm. There is the azimuth, or horizontal angle, of the source in the plane that lies ahead of us; the elevation, or vertical angle; and the velocity, or speed, of sound sources that are moving. We can also assess distance for stationary sound sources. Our brains calculate the azimuth of a particular sound source from the difference in arrival times at our ears, from the relative amplitude of the signal, especially at higher frequencies, and from the reflections off of our bodies.

Distance Cues

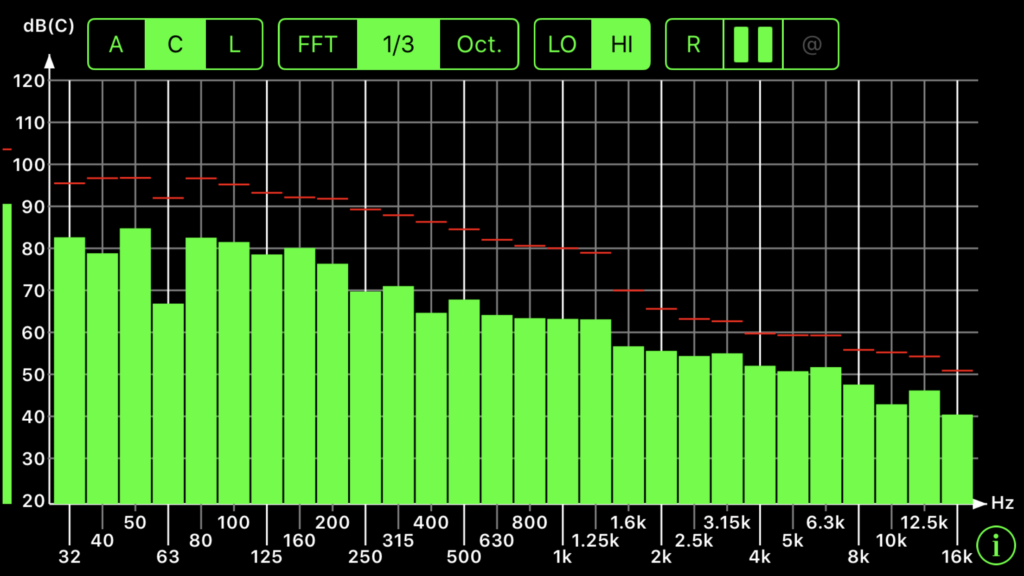

Our distance cues are calculated by our brains based on the loss of amplitude, the loss of higher frequencies, and that familiar concept in small room acoustics known as the ratio of direct sound to reflected sound within our room. Our head acts as a sound barrier that changes the timbre, intensity, and spectral characteristics of the sound between one ear and the other, which facilitates sound localization. These subtle differences between our ears are known as interaural cues. These processes work well with middle and high frequencies. Lower frequencies, which have much longer wavelengths, diffract around our head, forcing our brains to focus on the phase of the signal. Let’s compare how the ear and the microphone each react to these sonic issues.
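To put a rough number on the loss-of-amplitude cue, here is a minimal sketch. It assumes a point source in a free field, where the direct sound follows the inverse square law; a real room adds reflected energy, so treat the figures as the direct-sound part only. The function name `level_drop_db` is made up for this illustration.

```python
import math

def level_drop_db(near_m: float, far_m: float) -> float:
    """Drop in direct-sound level (dB) when moving from near_m to far_m.

    Assumes a point source in a free field (inverse square law),
    which ignores the reflected sound found in any real room.
    """
    return 20.0 * math.log10(far_m / near_m)

# Each doubling of distance costs roughly 6 dB of direct sound.
for distance in (1, 2, 4, 8):
    print(f"{distance} m: {level_drop_db(1, distance):.1f} dB below the 1 m level")
```

Since the reflected energy in a small room stays fairly constant with distance while the direct sound falls away, this drop is also what shifts the direct-to-reflected ratio.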

Haas Effect

Helmut Haas is credited with discovering the Haas effect. Simply put, the Haas effect states that we can localize a sound source despite all the reflections from surrounding surfaces. This precedence effect has been measured down to the millisecond level: even a 1 ms difference between the arrival times of direct and reflected energy can be perceived by humans and used to determine the location of the sound source.

Lateral Sound

Lateral sound source direction is analyzed by measuring the time and level differences between our ears. When sound strikes the right ear before the left ear, the brain is able to measure that small arrival time difference. It is also capable of assessing phase delays at low frequencies and group delays at higher frequencies. The sound that arrives at our right ear has a higher level than the sound at our left ear because the head shadows the left ear. These level differences are frequency-dependent and increase as the frequency rises.
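For a feel of how small these arrival time differences are, here is a rough sketch. It uses a simplified straight-path model and two assumed values that are not from this article: an ear spacing of 0.18 m and a speed of sound of 343 m/s. A real head bends the sound around it, so actual values run somewhat larger.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed (air at about room temperature)
EAR_SPACING = 0.18      # m, assumed distance between the two ears

def arrival_time_difference(azimuth_deg: float) -> float:
    """Approximate interaural time difference in seconds.

    0 degrees is straight ahead, 90 degrees is directly to one side.
    Simplified model: extra path length = spacing * sin(azimuth).
    """
    extra_path = EAR_SPACING * math.sin(math.radians(azimuth_deg))
    return extra_path / SPEED_OF_SOUND

for angle in (0, 15, 45, 90):
    microseconds = arrival_time_difference(angle) * 1e6
    print(f"{angle:>2} degrees: about {microseconds:.0f} microseconds")
```

Even with the source fully to one side, the difference stays well under one millisecond in this model.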

Time And Level Cut Off

The cut-off frequency for this time and level difference is 800 Hz. Below 800 Hz, time differences are evaluated. These are phase delays. Above 1,600 Hz, interaural level differences are evaluated. In the frequency range from 800 Hz to 1,600 Hz, both level and time are used together. The localization accuracy from these time and level cues is 1 degree for sounds in front of the listener and 15 degrees for sounds to the sides of the listener. Our hearing system can detect time differences between the ears as small as about 10 microseconds.
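The three frequency regions above can be written down as a small lookup. This is only a sketch of the 800 Hz and 1,600 Hz boundaries quoted in this section; the function name `localization_cue` is hypothetical, and real hearing shifts between cues gradually rather than at hard edges.

```python
def localization_cue(frequency_hz: float) -> str:
    """Return which interaural cue dominates, using the boundaries quoted above."""
    if frequency_hz < 800:
        return "time (phase delay)"
    if frequency_hz <= 1600:
        return "time and level together"
    return "level"

for frequency in (100, 800, 1200, 1600, 5000):
    print(f"{frequency} Hz: {localization_cue(frequency)}")
```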

Low Frequency Energy

Low-frequency localization is also governed by the physical dimensions of the head. Below 800 Hz, the dimensions of most human heads are less than 1/2 wavelength. This leaves the ears free to process phase delays between our ears without much sonic confusion. When the frequency drops below 80 Hz, it becomes harder to use time and level as measurements for localization. I suspect this 80 Hz number is the basis for acoustical people saying that one cannot really measure low frequencies below 80 Hz in our room.
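The half-wavelength comparison is easy to check with a few lines. This sketch assumes a speed of sound of 343 m/s and a head width of about 0.18 m; both values are assumptions for illustration, not measurements from this article.

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed
HEAD_WIDTH = 0.18       # m, assumed typical head width

def half_wavelength(frequency_hz: float) -> float:
    """Half of the wavelength in meters at the given frequency."""
    return SPEED_OF_SOUND / frequency_hz / 2.0

for frequency in (80, 800, 1600):
    half = half_wavelength(frequency)
    relation = "smaller" if HEAD_WIDTH < half else "larger"
    print(f"{frequency} Hz: half wavelength {half:.2f} m, head is {relation}")
```

At 80 Hz the half wavelength is over 2 m, more than ten times the assumed head width, which fits with how little the head can do to separate the two ears at those frequencies.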

Critical Bands

Our ears and brain form our auditory system. Our ears are our microphones and they feed data to our brain, or processor. All of this processing occurs within a critical band of frequencies. This hearing and processing band from 20 Hz to 20,000 Hz is broken down even further into 24 critical bands. For sound localization, all the information within this critical band is analyzed.

Cocktail Party Effect

We can process a single sound source within a room full of competing sounds. This is the definition of the “cocktail party effect”. Our signal processing system, comprised of the ears and brain, simply turns up the gain on the sound location we are interested in. It can increase the gain by up to 15 dB in selected locations. Can our microphones perform like our two ears?
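To see what a 15 dB gain means in plain ratios, here is a short sketch using the standard decibel conversions. The function names are only for illustration.

```python
def db_to_pressure_ratio(gain_db: float) -> float:
    """Convert a gain in dB to a sound pressure (amplitude) ratio."""
    return 10.0 ** (gain_db / 20.0)

def db_to_power_ratio(gain_db: float) -> float:
    """Convert a gain in dB to a power (energy) ratio."""
    return 10.0 ** (gain_db / 10.0)

gain = 15.0
print(f"{gain:.0f} dB is about {db_to_pressure_ratio(gain):.1f} times the sound pressure")
print(f"{gain:.0f} dB is about {db_to_power_ratio(gain):.1f} times the power")
```

In other words, the 15 dB figure corresponds to roughly 5.6 times the sound pressure of the competing sounds.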

Microphone Hearing

Microphones can “hear” at lower and higher frequency ranges than our human hearing. They can receive information that is lower than we can “hear” and much higher. Current thought is that microphones that record information above our hearing range capture higher frequency data that adds something to our music presentations. Some microphones claim to work out into the 40,000 – 50,000 Hz range. Even though we cannot hear that data, the claim is that it “adds” something to our recordings. If we cannot hear it, what does it add? The only thing it adds is more cost to the gear. If we can’t hear it, why should we pay more for it?

Time Differences

It would be difficult for a microphone to hear time differences unless one was using multiple microphones. A single microphone would need something to compare its signal to as a reference. This is similar to our ears and the transfer function that occurs with them, but not the same. Our ears measure these differences quickly with only our heads in the way. Microphones require a controlled environment where baseline measurements can be made and used for statistical comparisons without extraneous variables such as those found at a cocktail party.

Cocktail Party Microphone?

I was laughing at the cocktail party effect because I was wondering how a microphone would perform at a cocktail party. If it was to focus on a single sound source within the party, it would have to be very directional and not be impacted by reflected energy. One would probably have to use barrier technology around the microphone to isolate it from all the surrounding noise. That would not be acceptable behavior at a cocktail party, although more acceptable to me than most behaviors I see at these parties. Can you imagine a microphone with barriers moving towards someone at one of these parties?

Sound Pressure Levels

Levels of sound pressure are an easy thing for microphones to measure. I think they all can do this quite easily. Measuring all the other variables at the same time is more difficult. What a microphone picks up depends on its polar pattern. A polar pattern is the microphone’s pickup pattern. It is the physical area that the microphone “hears” in. There are three main patterns: cardioid, figure eight, and omnidirectional.

Microphone: https://en.wikipedia.org/wiki/Microphone

Microphone Types: https://www.sweetwater.com/insync/studio-microphone-buying-guide/#mic_types

Cardioid

Cardioid microphones pick up sound energy that is directly in front of them. They can pick up some side energy but no rear energy. Their main emphasis is at a 60-degree angle out of the microphone position. This pattern is created by permitting sound to reach the back of the microphone out of phase. The pattern gets more directional at higher frequencies and more omnidirectional at lower frequencies. This creates a varying frequency response from different angles.

Figure Of Eight

A figure of eight, or bi-directional, microphone picks up information equally from the front and rear of the microphone. Each side picks up in the opposite polarity to the other because air is pushing the diaphragm from opposite directions.

Omnidirectional

An omnidirectional, or omni, microphone picks up information from all directions, with an even balance of frequencies from every direction. It picks up all sounds within the recording space, so a room with a good acoustical environment is important.
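The three patterns can be compared with the textbook first-order model, where sensitivity = a + (1 - a) * cos(angle). This is an idealized sketch, not a measurement of any particular microphone: the blend values below are the standard ideal ones, and the model ignores the way real patterns change with frequency.

```python
import math

# Idealized first-order polar patterns: sensitivity = a + (1 - a) * cos(angle)
# a = 1.0 is all pressure (omni), a = 0.0 is all pressure gradient (figure eight).
PATTERNS = {"omnidirectional": 1.0, "cardioid": 0.5, "figure eight": 0.0}

def sensitivity(pattern: str, angle_deg: float) -> float:
    """Relative pickup at an angle (0 = front, 180 = rear).

    A negative value means the sound is picked up with inverted polarity.
    """
    a = PATTERNS[pattern]
    return a + (1.0 - a) * math.cos(math.radians(angle_deg))

for name in PATTERNS:
    row = ", ".join(
        f"{angle} deg: {sensitivity(name, angle):+.2f}" for angle in (0, 90, 180)
    )
    print(f"{name:16s} {row}")
```

In this model the cardioid drops to half sensitivity at the sides and to nothing at the rear, while the figure eight is dead at the sides and picks up the rear at full strength with inverted polarity.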

Ears

The ear has many attributes that a microphone does not. They also have some similarities. Our ears can localize sound in three different planes at the same time. They can assess vertical and horizontal position along with the intensity of the sound coming at us. Motion and loudness are immediately assessed, and distance from the sound source can be estimated. Distances can be judged by the back and forth interplay of sounds between our ears. This works well for middle and high frequencies. Low frequencies diffract around our heads and force us to focus on phase.

Microphones

Microphones operate at similar critical bands as our ears, but certain microphones can handle low frequencies much better than human hearing. A Neumann TLM 102 can handle a sound pressure level of 144 dB. That would destroy a human ear. Microphones need three different types of polar patterns to do what the human hearing system does with two ears. I like the microphone and my ear to be used together. That is the best of both worlds.
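To get a sense of how much pressure 144 dB represents, here is a small sketch that converts a sound pressure level to pascals. It uses the standard 20 micropascal reference for sound in air; the function name is only for illustration.

```python
REFERENCE_PRESSURE = 20e-6  # Pa, standard reference for sound pressure level in air

def spl_to_pascals(spl_db: float) -> float:
    """Convert a sound pressure level in dB SPL to RMS pressure in pascals."""
    return REFERENCE_PRESSURE * 10.0 ** (spl_db / 20.0)

for level in (60, 94, 120, 144):
    print(f"{level} dB SPL is about {spl_to_pascals(level):.3f} Pa")
```

By this conversion, 144 dB works out to roughly 317 Pa, more than 300 times the pressure at 94 dB, which is about 1 Pa.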

In Summary

I hope today’s discussion helps solve the problem you are having. Please message me at info@acousticfields.com if you have any questions, as I am always happy to help. If you want to learn more about room acoustics, please sign up for our free acoustic video training series and ebook. Upon sign up you will instantly have access to a series of videos and training to help improve the sound in your studio, listening room or home theatre.

Thanks

Very helpful information :) i want more about difference between ultrasound and infrasound i am much more interested in these kind of knowledge.

E, This is a forum on small room acoustics. The information you are requesting is beyond this scope. I would suggest you use the internet for further research on your topics of interest.